In April 2016, The Turning Forest premiered at the Tribeca Film Festival and made it into .

The Turning Forest is a magical sound-led VR fairytale written by Shelley Silas and directed by Oscar Raby. It was created for the and at Tribeca we also used a SubPac (a haptic vest) to enhance the audio experience. The experience took place in a magical forest installation built from acoustic blankets to reduce noise levels.

The original production was one of three short dramas commissioned by the EPSRC Programme Grant S3A (EP/L000539/1) and the BBC as part of the BBC Audio Research Partnership. Eloise Whitmore and Edwina Pitman produced a short audio feature telling the story of how The Turning Forest went from research project to film festival.

recently featured The Turning Forest after its world premiere at Tribeca. They give a great introduction to and the work that we're doing on this project and beyond. but the piece on The Turning Forest is embedded below. I also give a little more technical detail on how the 3D sound was created.

There has been a lot of discussion this year about the importance of sound in virtual reality. Tools are now available for creating and distributing content with dynamic binaural sound, i.e. headphone sound that gives a 3D impression and updates according to your orientation. With The Turning Forest VR project, our aim was to demonstrate the impact that high-quality 3D sound production can make in virtual reality content. To achieve this, we built two major components of our audio research work into a production workflow for VR: dynamic binaural rendering and the Audio Definition Model.
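To make the "3D impression" a little more concrete: a source off to one side reaches the nearer ear slightly earlier, producing an interaural time difference (ITD), one of the cues a binaural renderer reproduces. The minimal sketch below uses Woodworth's textbook spherical-head approximation, ITD ≈ (r/c)(θ + sin θ). It is an illustration only, not the renderer used in this production, and the head radius and speed of sound are assumed typical values. Because θ is measured relative to the head, the cue has to be recomputed whenever the listener turns, which is what makes the rendering "dynamic".

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C (assumed)
HEAD_RADIUS = 0.0875    # m, a commonly quoted average head radius (assumed)


def woodworth_itd(azimuth_deg: float) -> float:
    """Approximate interaural time difference in seconds for a distant source.

    Woodworth's spherical-head formula, valid for |azimuth| <= 90 degrees.
    0 degrees is straight ahead; the sign of the result tells you which ear
    the sound reaches first (positive means the ear on the positive side).
    """
    if abs(azimuth_deg) > 90.0:
        raise ValueError("this approximation only covers -90..90 degrees")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 30, 60, 90):
        print(f"{az:3d} deg -> {woodworth_itd(az) * 1e6:6.1f} microseconds")
```

At 90 degrees this gives roughly 0.65 ms, which is why even small head turns noticeably change the cue.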

Our binaural production system, previously used to create the radio dramas, was used to make a broadcast-quality sound mix for headphones, using real-time tracking to adapt the 3D audio scene to the listener's orientation. It was integrated with a synchronised 360° video viewer to allow for spatial alignment of visual and sound sources, as previously used on the .
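The core of "adapting the scene to the listener's orientation" is counter-rotating world-fixed sources by the tracked head rotation before rendering. A minimal sketch, assuming a tracker that reports yaw only (real trackers also report pitch and roll) and the ADM-style convention that azimuth 0 is straight ahead and positive angles are to the left; the scene and readings are hypothetical:

```python
def wrap_degrees(angle: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def head_relative_azimuth(source_azimuth: float, head_yaw: float) -> float:
    """Azimuth of a world-fixed source relative to where the listener faces.

    Assumed convention: positive azimuth is to the left, and a positive head
    yaw means the listener has turned to the left.
    """
    return wrap_degrees(source_azimuth - head_yaw)


if __name__ == "__main__":
    # Hypothetical world-fixed source azimuths, in degrees.
    scene = {"narrator": 0.0, "creature": 120.0, "stream": -60.0}
    # Hypothetical head-tracker yaw readings, in degrees.
    for yaw in (0.0, 45.0, -90.0):
        rendered = {name: head_relative_azimuth(az, yaw) for name, az in scene.items()}
        print(f"yaw {yaw:+6.1f}: {rendered}")
```

If the listener turns 30 degrees to the left, a source straight ahead should now be rendered 30 degrees to the right, so the sources stay put in the world while the head moves.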

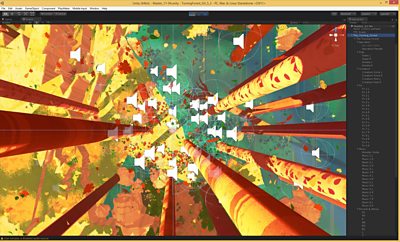

The big difference between this and previous projects was that this was not just 360° video production but virtual reality, where a 3D world was created using computer graphics and the listener could move within the scene (within a limited range). We created the audio first and then commissioned the wonderful Oscar Raby and his studio VRTOV to help us turn it into a VR experience. We therefore needed a workflow that allowed them to build an interactive visual world around our 3D sound scene. Using the Audio Definition Model, we could export the audio sources and their dynamic position data from the binaural production system into a single WAV file.
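In the Audio Definition Model (ITU-R BS.2076), a moving object's trajectory is described by a sequence of `audioBlockFormat` elements, each with a start time (`rtime`), a `duration` and a position. The sketch below builds just that fragment with Python's standard library for a hypothetical "creature" object. It is a simplified illustration, not the exporter used in this production: a complete ADM document also needs `audioProgramme`, `audioContent`, `audioObject`, `audioPackFormat` and track elements, and the IDs and keyframes here are made up.

```python
import xml.etree.ElementTree as ET


def adm_time(seconds: float) -> str:
    """Format seconds as an ADM timestamp (hh:mm:ss.zzzzz)."""
    hours, rem = divmod(seconds, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{int(hours):02d}:{int(minutes):02d}:{secs:08.5f}"


def object_channel_format(channel_id: str, name: str, keyframes) -> ET.Element:
    """Build an Objects-type audioChannelFormat with one block per keyframe.

    keyframes: iterable of (start_s, duration_s, azimuth, elevation, distance),
    with distance normalised to 0..1 as in ADM polar coordinates.
    """
    acf = ET.Element("audioChannelFormat", {
        "audioChannelFormatID": channel_id,
        "audioChannelFormatName": name,
        "typeLabel": "0003",
        "typeDefinition": "Objects",
    })
    suffix = channel_id.split("_", 1)[1]  # e.g. "00031001" from "AC_00031001"
    for i, (start, dur, az, el, dist) in enumerate(keyframes, start=1):
        block = ET.SubElement(acf, "audioBlockFormat", {
            "audioBlockFormatID": f"AB_{suffix}_{i:08X}",
            "rtime": adm_time(start),
            "duration": adm_time(dur),
        })
        for coord, value in (("azimuth", az), ("elevation", el), ("distance", dist)):
            pos = ET.SubElement(block, "position", {"coordinate": coord})
            pos.text = f"{value:g}"
    return acf


if __name__ == "__main__":
    # Hypothetical path: the creature circles from front to left to behind.
    path = [(0.0, 1.0, 0, 0, 1), (1.0, 1.0, 90, 0, 1), (2.0, 1.0, 180, 0, 1)]
    print(ET.tostring(object_channel_format("AC_00031001", "creature", path),
                      encoding="unicode"))
```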

Our software for binaural rendering and for handling the Audio Definition Model was then built into plug-ins for the game engine that VRTOV used to produce the graphical content. This meant we could export our complete object-based 3D audio mix from the audio workstation to the game engine via a single file.
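The "single file" works because WAV is a RIFF container: alongside the usual `fmt ` and `data` chunks, ADM metadata travels as XML in an `axml` chunk. The sketch below writes and inspects such a file using only the standard library. It is deliberately simplified and is not the production tooling: real ADM broadcast WAV files follow ITU-R BS.2088 (BW64), which also adds a `chna` chunk linking each audio track to its ADM track IDs and supports files larger than 4 GB.

```python
import struct


def build_wav_with_axml(channels: int, sample_rate: int, pcm16: bytes, axml: str) -> bytes:
    """Return a 16-bit PCM RIFF/WAVE file with an extra 'axml' chunk."""
    block_align = channels * 2  # bytes per frame of 16-bit samples
    if len(pcm16) % block_align:
        raise ValueError("pcm16 must contain whole frames")
    fmt = struct.pack("<HHIIHH", 1, channels, sample_rate,
                      sample_rate * block_align, block_align, 16)
    body = bytearray(b"WAVE")
    for chunk_id, payload in ((b"fmt ", fmt), (b"data", pcm16),
                              (b"axml", axml.encode("utf-8"))):
        body += chunk_id + struct.pack("<I", len(payload)) + payload
        if len(payload) % 2:  # RIFF pads each chunk to an even length
            body += b"\x00"
    return b"RIFF" + struct.pack("<I", len(body)) + bytes(body)


def list_chunks(data: bytes) -> list:
    """List the chunk IDs found in a RIFF/WAVE byte string."""
    if data[:4] != b"RIFF" or data[8:12] != b"WAVE":
        raise ValueError("not a RIFF/WAVE file")
    ids, pos = [], 12
    while pos + 8 <= len(data):
        chunk_id = data[pos:pos + 4].decode("ascii")
        (size,) = struct.unpack("<I", data[pos + 4:pos + 8])
        ids.append(chunk_id)
        pos += 8 + size + (size % 2)
    return ids


if __name__ == "__main__":
    silence = bytes(4800 * 2 * 2)  # 0.1 s of stereo silence at 48 kHz
    wav = build_wav_with_axml(2, 48000, silence, "<audioFormatExtended/>")
    with open("scene_with_adm.wav", "wb") as f:
        f.write(wav)
    print(list_chunks(wav))  # ['fmt ', 'data', 'axml']
```

Ordinary WAV readers simply skip chunks they do not recognise, which is what lets one file serve both the audio workstation and the game engine.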

One additional trick we used for the installation at Tribeca was to add a low-frequency effects (LFE) signal through a device called a SubPac, a backpack that translates the LFE signal into body vibrations. This shook the listener with the footsteps of the creature in the forest, which was fun.
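The post does not describe how the LFE feed for the SubPac was produced. As a hedged illustration of the general idea, the sketch below keeps only the low end of a signal with a first-order low-pass filter. The 120 Hz cutoff is an assumption borrowed from the usual LFE bandwidth in surround formats, and a real crossover would typically use a steeper filter than this gentle 6 dB/octave one.

```python
import math


def one_pole_lowpass(samples, sample_rate: float, cutoff_hz: float = 120.0):
    """Filter samples with a first-order (6 dB/octave) low-pass filter."""
    # Smoothing coefficient for a one-pole filter with the given cutoff.
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y = 0.0
    out = []
    for x in samples:
        y += a * (x - y)  # move a fraction of the way towards the input
        out.append(y)
    return out


if __name__ == "__main__":
    fs = 48000
    # Hypothetical test signal: a 50 Hz "footstep thud" plus 2 kHz detail.
    signal = [math.sin(2 * math.pi * 50 * n / fs) + math.sin(2 * math.pi * 2000 * n / fs)
              for n in range(fs)]
    lfe = one_pole_lowpass(signal, fs)
    print(f"peak of filtered signal: {max(abs(v) for v in lfe[fs // 2:]):.3f}")
```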

We plan to give more details on these tools and the workflow in a future technical paper. We learned a lot during this process, and there is plenty that further development can improve, but we feel the workflow allowed us to create a rich and immersive sound scene that greatly enhanced the virtual reality experience.

The Turning Forest VR would not have been possible without the excellent work of a large team of talented audio engineers:

BBC R&D Developers: Richard Taylor, Richard Day, Tom Nixon

S3A Researchers: James Woodcock, Andreas Franck, Phil Coleman, Dylan Menzies

Sound Production Team: Eloise Whitmore, Tom Parnell, Steven Marsh, Ben Young, Paul Cargill

Head of BBC R&D Audio Team: Frank Melchior

The three S3A dramas have already been used in several research studies. The content itself is available as object-based Audio Definition Model WAV files from the and a paper discussing the production was presented at the in Paris.

Immersive and Interactive Content section

The IIC section is a group of around 25 researchers investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.