As the 2019 BBC Proms season begins, BBC Radio 3 will once again create immersive binaural mixes of many concerts throughout the season. This year, they will be produced by BBC sound engineers using bespoke mixing tools from BBC Research & Development.

The 2019 Proms season runs from the 19th of July to the 14th of September and features more than 80 concerts. Since 2016, BBC R&D engineers have produced binaural mixes of a selection of Proms concerts alongside the usual stereo mix. When heard over headphones, binaural audio can evoke the perception of sounds coming from all around the listener in three dimensions, unlike a traditional stereo mix, which can often sound as if it is inside your head. We believe that this delivers a more immersive experience to the listener, and binaural audio therefore forms part of the strategic plan for the Immersive and Interactive Content section of R&D. The binaural mixes produced at the Proms this year will be available via the BBC Proms website, which will be updated as new mixes become available. Some of the mixes from 2018 are still available to listen to.

- BBC Proms - 2019 Binaural Mixes

- BBC Proms - 2018 Binaural Mixes

- BBC R&D - Binaural Sound

In 2016 we described the layout of microphones used for creating the binaural mixes of the Proms. Most of these microphones contributed to Radio 3's stereo mix as well as the binaural mix. The microphone layout has remained largely unchanged since 2016, as it has proven well suited to creating high-quality binaural mixes. We have also previously described the signal routing, revealing how the binaural mixes took feeds from the same mixing desk used to produce the stereo mix. There are several reasons for this, but fundamentally it minimises the extra work required to produce a binaural mix in addition to the broadcast stereo mix. This is vitally important given the already high workload involved in mixing the Proms, so this method continues to be used.

Although the microphone layout and signal routing used to produce these binaural mixes have remained the same, the production tools have not. Originally, commercially available software was used. However, this proved quite cumbersome to use in this environment, with over 120 incoming feeds! So, in the lead-up to the 2018 Proms, we began to look for a solution to this problem. Through discussions with BBC sound engineers who had produced these binaural mixes, we were able to identify the core issues with the existing workflow and draw up a list of requirements for an 'ideal' tool for large scale productions such as the Proms. The major requirements were:

- Live binaural rendering

- Capable of handling up to 128 microphone feeds

- Ability to ‘group’ feeds together

- Gain/trim, delay and 3D position controls for feeds

- Minimalist user interface with optimal use of screen space

- Monitoring facilities

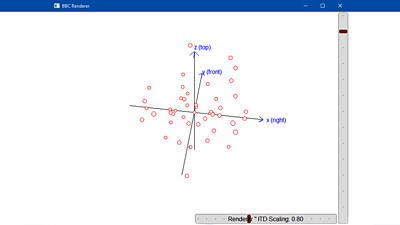

We researched commercially available software to determine whether an existing tool was better suited to the task. However, most currently available tools appear to be targeted at post-production, and none quite met all of the requirements for live production at the Proms. The best option, therefore, was to develop our own software. We decided to base the new tool on the R&D-developed 'BBC Renderer' application, since this was mature software that already provided most of the signal processing functionality we would need. It is essentially a configurable audio renderer, capable of rendering sources to a variety of formats, including binaural.

The BBC Renderer began development in 2014 and has been used for many of our spatial audio projects, such as our work on the binaural Doctor Who episode 'Knock Knock'. Its behaviour is controlled using Open Sound Control (OSC) messages, so a new, separate 'control interface' application was developed to generate these messages from user input. The control interface was designed specifically for the Proms and other large scale live productions. The only additional development required on the renderer itself was support for a monitoring bus and additional delays on feeds. The monitoring bus allows engineers to listen to just a selected subset of sources without affecting the main mix, just as they could on a traditional mixing desk. Controllable delays allow engineers to time-align feeds to create a coherent mix, avoiding unintentional echo-like effects or filtering caused by misaligned feeds. The software was completed by July 2018 and was subsequently used to produce the binaural mixes of the 2018 Proms.
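The post does not say how delay values are chosen, so the following is only a rough illustration of the idea behind time alignment: a spot microphone close to an instrument picks up sound earlier than a more distant main pair, so delaying the spot feed by the difference in travel time lines the two up. The speed of sound (343 m/s), the 48 kHz sample rate and the microphone distances below are assumptions for the example; real settings may well be refined by ear or by measurement.

```python
# Sketch: estimating a time-alignment delay for a spot microphone feed.
# Assumptions (not taken from the BBC tool): speed of sound, sample rate, distances.
from typing import Tuple

SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value for air at about 20 degrees Celsius
SAMPLE_RATE_HZ = 48_000         # assumed sample rate


def alignment_delay(spot_distance_m: float, main_distance_m: float,
                    sample_rate_hz: int = SAMPLE_RATE_HZ) -> Tuple[float, int]:
    """Return (delay in ms, delay in whole samples) to apply to a spot mic feed.

    Sound reaches a spot mic close to the source before it reaches a more
    distant main pair; delaying the spot feed by the difference in travel
    time aligns the two and avoids echo-like or comb-filtering effects.
    """
    path_difference_m = main_distance_m - spot_distance_m
    if path_difference_m <= 0:
        return 0.0, 0  # spot mic is not closer than the main pair: no delay
    delay_s = path_difference_m / SPEED_OF_SOUND_M_PER_S
    return delay_s * 1000.0, round(delay_s * sample_rate_hz)


# Hypothetical example: spot mic 1 m from a soloist, main pair 8 m away.
delay_ms, delay_samples = alignment_delay(1.0, 8.0)
print(f"{delay_ms:.1f} ms = {delay_samples} samples")  # prints "20.4 ms = 980 samples"
```

A 7 m path difference works out at roughly 20 ms, which is easily long enough to be heard as a distinct smear or echo if left uncorrected.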

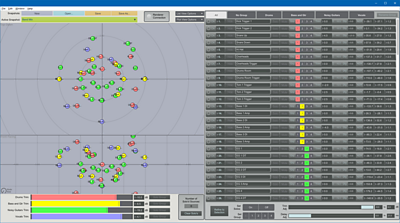

In designing the control interface, R&D engineers collaborated with sound engineers working on the Proms, and a simple wireframe user-interface design was agreed upon. A key feature of this design was that a large portion of the screen space would be dedicated to a positioning control displaying the locations of all 'sources' (feeds from microphones) in a single, intuitive display. This control would also allow source positions to be adjusted in three dimensions using 'drag-and-drop'. Another large area of the screen displays a list of sources, with each source occupying a narrow strip to reduce the need to scroll. Each strip provides parameter controls for the source, such as position, level trim and delay, as well as a control to assign the source to a group. The gain of all sources in a group can then be adjusted collectively using group trim controls.
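To make the strip and group controls more concrete, here is a hypothetical data model for a source strip. The field names, the position convention and the rule that a group trim simply adds to each member's own trim (in dB) are assumptions for illustration, not a description of the control interface's internals. Because adding decibels corresponds to multiplying linear gains, a group trim of this kind raises or lowers every member by the same amount while preserving their relative balance.

```python
# Hypothetical model of per-source strip controls and group trims.
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Source:
    name: str
    trim_db: float = 0.0    # per-source level trim
    delay_ms: float = 0.0   # per-source time-alignment delay
    # Assumed convention: (azimuth in degrees, elevation in degrees, distance in m).
    position: Tuple[float, float, float] = (0.0, 0.0, 1.0)
    group: Optional[str] = None  # group assignment, if any


def db_to_linear(db: float) -> float:
    """Convert a level in dB to a linear amplitude gain factor."""
    return 10.0 ** (db / 20.0)


def effective_gain(source: Source, group_trims_db: Dict[str, float]) -> float:
    """Linear gain for a source: its own trim plus its group's trim, both in dB."""
    total_db = source.trim_db
    if source.group is not None:
        total_db += group_trims_db.get(source.group, 0.0)
    return db_to_linear(total_db)


# Hypothetical example: a 'strings' group pulled down by 3 dB.
group_trims_db = {"strings": -3.0}
violins = Source("violins_1", trim_db=1.0, position=(-30.0, 0.0, 6.0), group="strings")
soloist = Source("soloist_spot", trim_db=0.0)

print(round(effective_gain(violins, group_trims_db), 3))  # 1 dB - 3 dB = -2 dB -> 0.794
print(effective_gain(soloist, group_trims_db))            # no group: 0 dB -> 1.0
```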

You may notice that the design of the control interface is very minimalist, and intentionally so. This reduces complexity for the operator, which improves ease of use and minimises the possibility of mistakes. Features that are not strictly necessary are not implemented. For example, because the feeds are taken via the mixing desk used for the stereo mix, they have already been processed in terms of equalisation (tone) and dynamics (level variation), so the software does not include these features. Of course, this assumes that no additional processing is required beyond that applied to the feeds for the stereo mix, but in our experience this has been sufficient to produce high-quality binaural mixes. Similarly, user feedback on the original version of the control interface used during the 2018 Proms revealed that some controls were very rarely used. These have been removed from the main window in the latest version of the software for the 2019 Proms and are now accessible via menus, further decluttering the user interface. Other improvements based on user feedback from the 2018 Proms have also been implemented.

Audio objects! Virtual binaural for the Proms courtesy of BBC R&D.

— paul morgan (@pauldmorgan) July 20, 2019

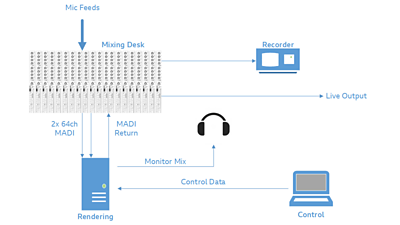

As mentioned previously, the tool comprises two separate pieces of software, the renderer and the control interface, which communicate with each other using OSC. Because OSC messages can be passed over a network, the control interface does not need to run on the same machine as the renderer, which offers more flexibility in how equipment is set up. The diagram below illustrates a typical equipment layout for a large scale live production.

All microphones enter the mixing desk via their usual routes. At the Proms, this is via a Stagetec Nexus audio network distributed around the venue and terminating in Sound 3, the radio outside broadcast truck. The desk passes these feeds to the Rendering machine over two MADI (Multichannel Audio Digital Interface) connections. MADI can carry up to 64 channels of audio over a single coaxial or fibre-optic cable, so we employ two incoming MADI connections to carry up to 128 feeds at the Proms. The feeds are sent from the desk 'post-fade', meaning that each feed is taken from the desk's internal signal chain just after the fader, which is usually the last point in the signal chain of an individual channel. Each feed has therefore already passed through the desk's equalisation and dynamics processing before being sent out over MADI. Meanwhile, the Control machine sends control data to the Rendering machine as OSC messages. The rendering software processes the incoming audio feeds according to this control data, applying the appropriate gain, delay and binaural filter for each feed's 3D position to produce an overall output mix. In fact, two output mixes are produced: the monitoring mix, which lets the engineer listen to any selection of feeds they want (solos), and the main mix (the mix intended for the audience), which is completely unaffected by any monitoring controls. The main mix returns to the desk over an outgoing MADI connection. From there it can be fed to downstream equipment, for example a recorder, or sent as a live output via a distribution/transmission chain.
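The per-feed processing and the two output mixes can be illustrated with a deliberately simplified, offline sketch. It is not the BBC Renderer's implementation: it assumes each source's binaural filter is a pair of head-related impulse responses (HRIRs) already chosen for its 3D position, uses direct convolution on whole signals rather than block-based real-time processing, and ignores moving sources. It does capture the routing described above: every source contributes to the main mix, while only soloed sources reach the monitoring mix, so solo selections never change what the audience hears.

```python
# Simplified offline sketch: gain, delay and HRIR filtering per source,
# summed into a main mix and a solo-based monitoring mix (not the real renderer).
from typing import List, NamedTuple, Sequence, Set, Tuple

Stereo = Tuple[List[float], List[float]]


class RenderSource(NamedTuple):
    signal: List[float]      # post-fade feed from the desk
    gain: float              # linear gain (e.g. source trim combined with group trim)
    delay_samples: int       # time-alignment delay
    hrir_left: List[float]   # binaural filter pair for the source's position,
    hrir_right: List[float]  # assumed to have been selected elsewhere


def convolve(x: Sequence[float], h: Sequence[float]) -> List[float]:
    """Direct-form FIR convolution; output has len(x) + len(h) - 1 samples."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def fit(samples: List[float], length: int) -> List[float]:
    """Truncate or zero-pad a signal to exactly `length` samples."""
    return samples[:length] + [0.0] * max(0, length - len(samples))


def render_source(src: RenderSource, length: int) -> Stereo:
    """Apply gain and delay, then filter with the left and right HRIRs."""
    delayed = [0.0] * src.delay_samples + [s * src.gain for s in src.signal]
    return (fit(convolve(delayed, src.hrir_left), length),
            fit(convolve(delayed, src.hrir_right), length))


def render_mixes(sources: Sequence[RenderSource], soloed: Set[int],
                 length: int) -> Tuple[Stereo, Stereo]:
    """Return (main mix, monitoring mix); solos affect only the monitoring mix."""
    main = ([0.0] * length, [0.0] * length)
    monitor = ([0.0] * length, [0.0] * length)
    for index, src in enumerate(sources):
        left, right = render_source(src, length)
        targets = [main, monitor] if index in soloed else [main]
        for mix in targets:
            for n in range(length):
                mix[0][n] += left[n]
                mix[1][n] += right[n]
    return main, monitor


# Toy example with impulse inputs and made-up two-tap "HRIRs".
sources = [
    RenderSource([1.0], 1.0, 0, [1.0, 0.5], [0.25]),
    RenderSource([1.0], 0.5, 2, [1.0], [1.0]),
]
main, monitor = render_mixes(sources, soloed={1}, length=4)
```

Soloing source 1 puts only its delayed, attenuated impulse into the monitoring mix, while the main mix contains both sources whether or not anything is soloed.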

The tool is not only aimed at the Proms. It is currently also being used to create binaural mixes of the BBC Philharmonic's Bridgewater Hall concert season in Manchester, and our intention is to roll it out to the wider BBC in the near future. This year will be the first time that the binaural mixes of the Proms are produced by BBC sound engineers without R&D engineers alongside. This has been aided by the introduction of a , the content of which has been guided by R&D. Making the tool available to the wider BBC will facilitate production of binaural mixes from a broader range of live content. Although the tool can be used for non-live and post-produced content, other existing tools are better suited to these scenarios, so this is not a target application; the focus will remain on live production. In the coming months, it is likely that the tool will also receive some updates based on feedback from operators at the Proms this year and on other potential use cases within the wider BBC.


- BBC R&D - Datasets for assessing spatial audio systems

- BBC R&D - Spatial Audio for Broadcast

- BBC R&D - Surround Sound with Height

- Immersive Audio Training and Skills from the BBC Academy including:

  - Sound Bites - An Immersive Masterclass

  - Sounds Amazing - audio gurus share tips


Immersive and Interactive Content section

The IIC section is a group of around 25 researchers investigating ways of capturing and creating new kinds of audio-visual content, with a particular focus on immersion and interactivity.